Robots File: Disallow URLs and Sitemaps Autodiscovery

Easily create robots.txt files that contain disallow instructions and references to generated XML sitemaps for autodiscovery.

Robots.txt File Introduction

Since the early days of the internet and search engines, the robots.txt file has been how website owners and their webmasters tell search engine crawlers like GoogleBot which pages and content should be ignored and left out of search results.

This was the situation for many years until Google created Google Sitemaps / XML Sitemaps Protocol.

The new functionality was called Sitemaps Autodiscovery. It added instructions to the robots.txt file that point search engines to your XML sitemaps, making it easier for them to discover all your XML sitemap files.


Complete Robots.txt File Example

If you intend to create or edit robots.txt files manually, here is a complete example that combines disallowed URLs with XML Sitemaps Autodiscovery.

See below for more information.

    User-agent: *
    Disallow: /home/feedback.php
    Disallow: /home/link-us.php?
    Disallow: /home/social-bookmark.php?
    Sitemap: http://www.example.com/sitemap.xml
    Sitemap: http://www.example.com/sitemap-blog.xml
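For readers who script this by hand, the example above can be produced with a few lines of Python. This is only a minimal sketch and not how the sitemap generator itself works: the helper name `build_robots_txt` and its parameters are made up for illustration.

```python
def build_robots_txt(disallow_paths, sitemap_urls, user_agent="*"):
    """Return robots.txt content with one directive per line.

    Hypothetical helper for illustration only.
    """
    lines = [f"User-agent: {user_agent}"]
    # One Disallow line per path that crawlers should skip.
    lines += [f"Disallow: {path}" for path in disallow_paths]
    # One Sitemap line per XML sitemap, given as a fully qualified URL.
    lines += [f"Sitemap: {url}" for url in sitemap_urls]
    return "\n".join(lines) + "\n"


robots_txt = build_robots_txt(
    ["/home/feedback.php", "/home/link-us.php?", "/home/social-bookmark.php?"],
    [
        "http://www.example.com/sitemap.xml",
        "http://www.example.com/sitemap-blog.xml",
    ],
)
print(robots_txt)
```

The printed text can be saved as robots.txt and uploaded to the root of the website.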


Robots Disallow URLs with Sitemapper "List" and "Analysis" Filters

If you want our sitemap builder to create your robots.txt file, you will need to read the help about configuring output and analysis filters.

Note: Only standard path filters, i.e. filters starting with a single colon (:), are added to the robots.txt file.

From sitemap generator tool tip:


Text string matches: "mypics". Path relative to root: ":mypics/", subpaths only: ":mypics/*", regex search: "::mypics[0-9]*/"
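The three filter forms in the tool tip differ only in their prefix, so they can be told apart mechanically. The sketch below is an illustration of that rule, not the sitemap generator's own code: the function names `classify_filter` and `robots_eligible` are hypothetical, and it does not show how the tool turns a path filter into a Disallow line.

```python
def classify_filter(filter_text):
    """Classify a filter by its prefix, following the tool tip examples."""
    if filter_text.startswith("::"):
        return "regex"  # e.g. "::mypics[0-9]*/"
    if filter_text.startswith(":"):
        return "path"   # e.g. ":mypics/" or ":mypics/*"
    return "text"       # e.g. "mypics"


def robots_eligible(filters):
    """Keep only standard path filters (those with a single leading colon)."""
    return [f for f in filters if classify_filter(f) == "path"]


filters = ["mypics", ":mypics/", ":mypics/*", "::mypics[0-9]*/"]
for f in filters:
    print(f, "->", classify_filter(f))
print(robots_eligible(filters))
```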

Robots Text File and XML Sitemaps Autodiscovery

In 2007 it became possible to indirectly submit XML sitemaps to search engines by listing them in the robots.txt file.

This concept is called XML sitemaps autodiscovery, and it is part of the XML sitemaps protocol.

To add XML sitemaps autodiscovery to a robots.txt file, add a line with the fully qualified URL of the XML sitemap file, like this: Sitemap: http://www.example.com/sitemap.xml
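To check that a robots.txt file exposes its sitemaps as intended, you can parse it the way a crawler would. The sketch below uses Python's standard `urllib.robotparser` module (the `site_maps()` method requires Python 3.8 or newer). The robots.txt content is inlined as a string for illustration, reusing the example URLs from this page.

```python
from urllib.robotparser import RobotFileParser

# Sample robots.txt content, inlined so the example needs no network access.
ROBOTS_TXT = """\
User-agent: *
Disallow: /home/feedback.php
Sitemap: http://www.example.com/sitemap.xml
Sitemap: http://www.example.com/sitemap-blog.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# URLs listed on Sitemap lines, or None if the file has none.
sitemaps = parser.site_maps()

# Disallow rules still apply as usual.
blocked = not parser.can_fetch("*", "http://www.example.com/home/feedback.php")
allowed = parser.can_fetch("*", "http://www.example.com/index.html")

print(sitemaps)
print(blocked, allowed)
```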


Below, we have listed some complete examples using XML sitemaps autodiscovery in robots.txt file:

If you created multiple XML sitemap files covering different parts of your website:

    User-agent: *
    Sitemap: http://www.example.com/sitemap.xml
    Sitemap: http://www.example.com/sitemap-1.xml

Or refer to the XML sitemap index file instead that links all XML sitemap files:

    User-agent: *
    Sitemap: http://www.example.com/sitemap-index.xml

Some URLs for more information about robots text file:

- Official sitemaps documentation about robots file and autodiscovery.

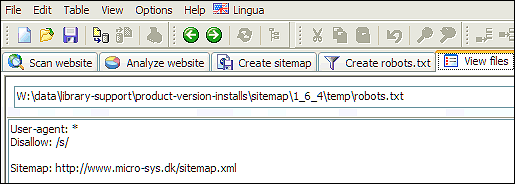

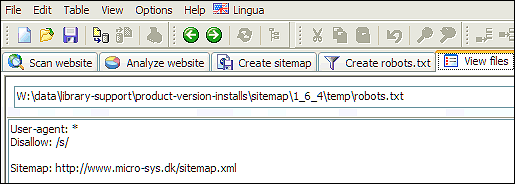

Create Robots Text File with Sitemap Generator

Sitemap generator can create robots.txt files.

Generated robots.txt files are ready to be uploaded and used by search engines.


Robots File and Cross Submit Sitemaps for Multiple Websites

In the beginning, and for a long time after that, it was not possible to submit sitemaps for websites unless the sitemaps were hosted and located on the same domain as the websites.

However, now some search engines include support for another way of managing sitemaps across multiple sites and domains. The requirement is that you need to verify ownership of all websites in Google Search Console or similar depending on the search engine.

To learn more see:


- Sitemaps protocol: Cross sitemaps submit and manage using robots.txt.

- Google: More website verification methods than sitemaps protocol defines.
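When a robots.txt file references sitemaps on another domain, it can help to see which entries rely on cross submission. The following is a rough sketch under stated assumptions: the helper `split_by_host` is hypothetical, and `sitemaps.example.net` is an invented host used only to show a sitemap stored on a different domain.

```python
from urllib.parse import urlsplit


def split_by_host(robots_url, sitemap_urls):
    """Split sitemap URLs into same-host and cross-host lists.

    Hypothetical helper: compares each sitemap's host name with the host
    that serves the robots.txt file.
    """
    robots_host = urlsplit(robots_url).hostname
    same_host, cross_host = [], []
    for url in sitemap_urls:
        if urlsplit(url).hostname == robots_host:
            same_host.append(url)
        else:
            cross_host.append(url)
    return same_host, cross_host


same_host, cross_host = split_by_host(
    "http://www.example.com/robots.txt",
    [
        "http://www.example.com/sitemap.xml",
        "http://sitemaps.example.net/example-com-sitemap.xml",  # invented host
    ],
)
print("Same host:", same_host)
print("Cross host:", cross_host)
```

Entries in the cross-host list are the ones that depend on the search engine supporting cross submission.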